Mirror of https://github.com/alecthomas/chroma.git, synced 2025-01-28 03:29:41 +02:00

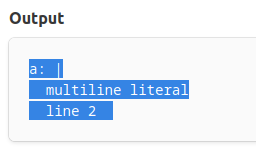

This resolves an issue with parsing YAML multiline strings, for example:

```yaml
a: |
  multiline literal
  line 2
```

The regex used would capture the amount of indentation in the third capture group and then use it as a kind of "status" to know which lines belong to the indented multiline block. However, because it is a capturing group, it has to be assigned a token, which was `TextWhitespace`. As a result, the indentation was emitted after the multiline content. Strictly speaking it should be a non-capturing group, but then it can no longer be referenced elsewhere in the regex.

Therefore I've gone with the solution of adding a new token, `Ignore`, which is not emitted as a token by the iterator. Capture groups can then be used safely (for example as backreference targets) without showing up in the output.
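The filtering idea can be sketched in a few lines of Go. This is a minimal illustration under stated assumptions, not chroma's implementation: `TokenType`, `Token`, `Iterator`, `sliceIterator`, `skipIgnored` and `collect` are hypothetical, simplified stand-ins (chroma's real iterator and token types differ). The wrapper pulls tokens from an underlying iterator and silently skips any whose type is `Ignore`, so a group can be matched and back-referenced without reaching the output.

```go
package main

// TokenType is a simplified, hypothetical stand-in for chroma's token type.
type TokenType int

const (
	Ignore         TokenType = iota - 1 // sentinel: matched but never emitted
	Keyword                             // ordinary token types used below
	TextWhitespace
)

// Token pairs a token type with the text it matched.
type Token struct {
	Type  TokenType
	Value string
}

// Iterator returns the next token, and false once the stream is exhausted.
// (Hypothetical shape; chroma's iterator signals the end differently.)
type Iterator func() (Token, bool)

// sliceIterator turns a fixed slice of tokens into an Iterator.
func sliceIterator(tokens []Token) Iterator {
	i := 0
	return func() (Token, bool) {
		if i >= len(tokens) {
			return Token{}, false
		}
		t := tokens[i]
		i++
		return t, true
	}
}

// skipIgnored wraps next so that Ignore tokens are consumed but never returned.
func skipIgnored(next Iterator) Iterator {
	return func() (Token, bool) {
		for {
			t, ok := next()
			if !ok || t.Type != Ignore {
				return t, ok
			}
		}
	}
}

// collect drains an iterator into a slice.
func collect(next Iterator) []Token {
	var out []Token
	for t, ok := next(); ok; t, ok = next() {
		out = append(out, t)
	}
	return out
}
```

Fed `{Ignore, "  "}`, `{Keyword, "hello"}` and `{TextWhitespace, "\n"}`, `collect(skipIgnored(sliceIterator(...)))` returns only the last two tokens, which mirrors what `TestIgnoreToken` expects from the real lexer.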

206 lines · 5.0 KiB · Go


```go
package chroma

import (
	"testing"

	assert "github.com/alecthomas/assert/v2"
)

// mustNewLexer builds a RegexLexer from a fixed rule set and fails the test on error.
func mustNewLexer(t *testing.T, config *Config, rules Rules) *RegexLexer { // nolint: forbidigo
	lexer, err := NewLexer(config, func() Rules {
		return rules
	})
	assert.NoError(t, err)
	return lexer
}
```

```go
func TestNewlineAtEndOfFile(t *testing.T) {
	// With EnsureNL the lexer appends the missing "\n", so the rule matches.
	l := Coalesce(mustNewLexer(t, &Config{EnsureNL: true}, Rules{ // nolint: forbidigo
		"root": {
			{`(\w+)(\n)`, ByGroups(Keyword, Whitespace), nil},
		},
	}))
	it, err := l.Tokenise(nil, `hello`)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{Keyword, "hello"}, {Whitespace, "\n"}}, it.Tokens())

	// Without EnsureNL there is no trailing newline, so the rule cannot match
	// and the text is reported as Error.
	l = Coalesce(mustNewLexer(t, nil, Rules{ // nolint: forbidigo
		"root": {
			{`(\w+)(\n)`, ByGroups(Keyword, Whitespace), nil},
		},
	}))
	it, err = l.Tokenise(nil, `hello`)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{Error, "hello"}}, it.Tokens())
}
```

```go
func TestMatchingAtStart(t *testing.T) {
	// `^-` must match only at the start of the input; the "-" in the later
	// "->" must be lexed by the Operator rule instead.
	l := Coalesce(mustNewLexer(t, &Config{}, Rules{ // nolint: forbidigo
		"root": {
			{`\s+`, Whitespace, nil},
			{`^-`, Punctuation, Push("directive")},
			{`->`, Operator, nil},
		},
		"directive": {
			{"module", NameEntity, Pop(1)},
		},
	}))
	it, err := l.Tokenise(nil, `-module ->`)
	assert.NoError(t, err)
	assert.Equal(t,
		[]Token{{Punctuation, "-"}, {NameEntity, "module"}, {Whitespace, " "}, {Operator, "->"}},
		it.Tokens())
}
```
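`Push("directive")` and `Pop(1)` in the test above move the lexer between rule groups. A hypothetical model of that state stack, assuming a plain slice of state names (chroma's own lexer state and mutators carry more information), might look like this; `stateStack`, `push`, `pop` and `current` are illustrative names only.

```go
package main

// stateStack is a hypothetical model of a lexer's state stack: the last
// element names the rule group used for the next match.
type stateStack []string

// push enters one or more states, like a Push("directive") mutator.
func (s *stateStack) push(states ...string) {
	*s = append(*s, states...)
}

// pop leaves n states, like Pop(1). This sketch clamps n so the bottom
// ("root") state is never removed; it assumes the stack is non-empty.
func (s *stateStack) pop(n int) {
	if n > len(*s)-1 {
		n = len(*s) - 1
	}
	*s = (*s)[:len(*s)-n]
}

// current returns the state whose rules apply to the next match.
func (s stateStack) current() string {
	return s[len(s)-1]
}
```

The clamp in `pop` is a choice made for this sketch so that over-popping returns to `root` instead of emptying the stack; it is not taken from chroma.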

```go
func TestEnsureLFOption(t *testing.T) {
	// With EnsureLF, "\r\n" and "\r" are rewritten to "\n" before lexing.
	l := Coalesce(mustNewLexer(t, &Config{}, Rules{ // nolint: forbidigo
		"root": {
			{`(\w+)(\r?\n|\r)`, ByGroups(Keyword, Whitespace), nil},
		},
	}))
	it, err := l.Tokenise(&TokeniseOptions{
		State:    "root",
		EnsureLF: true,
	}, "hello\r\nworld\r")
	assert.NoError(t, err)
	assert.Equal(t, []Token{
		{Keyword, "hello"},
		{Whitespace, "\n"},
		{Keyword, "world"},
		{Whitespace, "\n"},
	}, it.Tokens())

	// Without EnsureLF the original line endings are preserved.
	l = Coalesce(mustNewLexer(t, nil, Rules{ // nolint: forbidigo
		"root": {
			{`(\w+)(\r?\n|\r)`, ByGroups(Keyword, Whitespace), nil},
		},
	}))
	it, err = l.Tokenise(&TokeniseOptions{
		State:    "root",
		EnsureLF: false,
	}, "hello\r\nworld\r")
	assert.NoError(t, err)
	assert.Equal(t, []Token{
		{Keyword, "hello"},
		{Whitespace, "\r\n"},
		{Keyword, "world"},
		{Whitespace, "\r"},
	}, it.Tokens())
}
```

```go
func TestEnsureLFFunc(t *testing.T) {
	tests := []struct{ in, out string }{
		{in: "", out: ""},
		{in: "abc", out: "abc"},
		{in: "\r", out: "\n"},
		{in: "a\r", out: "a\n"},
		{in: "\rb", out: "\nb"},
		{in: "a\rb", out: "a\nb"},
		{in: "\r\n", out: "\n"},
		{in: "a\r\n", out: "a\n"},
		{in: "\r\nb", out: "\nb"},
		{in: "a\r\nb", out: "a\nb"},
		{in: "\r\r\r\n\r", out: "\n\n\n\n"},
	}
	for _, test := range tests {
		out := ensureLF(test.in)
		// assert.Equal takes the expected value before the actual one.
		assert.Equal(t, test.out, out)
	}
}
```
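The table in `TestEnsureLFFunc` fully specifies the normalisation: `"\r\n"` and any lone `"\r"` both become `"\n"`. One way to meet that specification with only the standard library is two passes of `strings.ReplaceAll`, converting CRLF pairs first. `normalizeNewlines` is a hypothetical name for this sketch; it is not chroma's `ensureLF`, whose implementation may differ.

```go
package main

import "strings"

// normalizeNewlines is a hypothetical helper (not chroma's ensureLF) that
// rewrites "\r\n" and any lone "\r" to "\n", as the TestEnsureLFFunc table requires.
func normalizeNewlines(s string) string {
	// Convert CRLF pairs first so their '\r' is not turned into an extra '\n'.
	s = strings.ReplaceAll(s, "\r\n", "\n")
	// Any '\r' still present is a lone carriage return.
	return strings.ReplaceAll(s, "\r", "\n")
}
```

The order of the two passes matters: replacing lone `"\r"` first would turn `"\r\n"` into `"\n\n"`. A single loop over the bytes would avoid the intermediate string at the cost of a few more lines.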

```go
func TestByGroupNames(t *testing.T) {
	// Every named group has an emitter.
	l := Coalesce(mustNewLexer(t, nil, Rules{ // nolint: forbidigo
		"root": {
			{
				`(?<key>\w+)(?<operator>=)(?<value>\w+)`,
				ByGroupNames(map[string]Emitter{
					`key`:      String,
					`operator`: Operator,
					`value`:    String,
				}),
				nil,
			},
		},
	}))
	it, err := l.Tokenise(nil, `abc=123`)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{String, `abc`}, {Operator, `=`}, {String, `123`}}, it.Tokens())

	// The "operator" group has no emitter, so its text is emitted as Error.
	l = Coalesce(mustNewLexer(t, nil, Rules{ // nolint: forbidigo
		"root": {
			{
				`(?<key>\w+)(?<operator>=)(?<value>\w+)`,
				ByGroupNames(map[string]Emitter{
					`key`:   String,
					`value`: String,
				}),
				nil,
			},
		},
	}))
	it, err = l.Tokenise(nil, `abc=123`)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{String, `abc`}, {Error, `=`}, {String, `123`}}, it.Tokens())

	// "=" lies outside any named group and is not emitted; Coalesce then
	// merges the two adjacent String tokens.
	l = Coalesce(mustNewLexer(t, nil, Rules{ // nolint: forbidigo
		"root": {
			{
				`(?<key>\w+)=(?<value>\w+)`,
				ByGroupNames(map[string]Emitter{
					`key`:   String,
					`value`: String,
				}),
				nil,
			},
		},
	}))
	it, err = l.Tokenise(nil, `abc=123`)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{String, `abc123`}}, it.Tokens())

	// The group is named "op", which the map does not cover, so it becomes Error.
	l = Coalesce(mustNewLexer(t, nil, Rules{ // nolint: forbidigo
		"root": {
			{
				`(?<key>\w+)(?<op>=)(?<value>\w+)`,
				ByGroupNames(map[string]Emitter{
					`key`:      String,
					`operator`: Operator,
					`value`:    String,
				}),
				nil,
			},
		},
	}))
	it, err = l.Tokenise(nil, `abc=123`)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{String, `abc`}, {Error, `=`}, {String, `123`}}, it.Tokens())

	// No named groups at all: the whole match is emitted as Error.
	l = Coalesce(mustNewLexer(t, nil, Rules{ // nolint: forbidigo
		"root": {
			{
				`\w+=\w+`,
				ByGroupNames(map[string]Emitter{
					`key`:      String,
					`operator`: Operator,
					`value`:    String,
				}),
				nil,
			},
		},
	}))
	it, err = l.Tokenise(nil, `abc=123`)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{Error, `abc=123`}}, it.Tokens())
}
```

```go
func TestIgnoreToken(t *testing.T) {
	// The indentation group exists only so that \1 can reference it;
	// Ignore keeps it out of the emitted tokens.
	l := Coalesce(mustNewLexer(t, &Config{EnsureNL: true}, Rules{ // nolint: forbidigo
		"root": {
			{`(\s*)(\w+)(?:\1)(\n)`, ByGroups(Ignore, Keyword, Whitespace), nil},
		},
	}))
	it, err := l.Tokenise(nil, ` hello `)
	assert.NoError(t, err)
	assert.Equal(t, []Token{{Keyword, "hello"}, {TextWhitespace, "\n"}}, it.Tokens())
}
```