This PR captures the code coverage from our unit and integration tests. At the moment it simply pushes the result to Codacy, a platform that assists with improving code health. Right now the focus is just getting visibility, but I want to experiment with alerts on PRs when a PR causes a drop in code coverage.

To be clear, I'm not a dogmatist about this: I have no aspirations to get to 100% code coverage, and I don't consider lines-of-code-covered to be a perfect metric, but it is a pretty good heuristic for how extensive your tests are.

The good news is that our coverage is actually pretty good, which was a surprise to me!

As a conflict of interest statement: I'm in Codacy's 'Pioneers' program, which provides funding and mentorship, and part of the arrangement is to use Codacy's tooling on lazygit. This is something I'd have been happy to explore even without being part of the program, and just like with any other static analysis tool, we can tweak it to fit our use case and values.

## How we're capturing code coverage

This deserves its own section. Basically, when you build the lazygit binary you can specify that you want the binary to capture coverage information when it runs. Then, if you run the binary with a GOCOVERDIR env var, it will write coverage information to that directory before exiting. It's a similar story with unit tests, except with those you just specify the directory inline via `-test.gocoverdir`.

We run unit tests and integration tests separately in CI, _and_ we run them in parallel across different OSes and git versions. So I've got each step uploading the coverage files as an artefact, and then in a separate step we combine all the artefacts together and generate a combined coverage file, which we then upload to Codacy (but in future we can do other things with it, like warn in a PR if code coverage decreases too much).
Another caveat is that when running integration tests, not only do we want to obtain code coverage from code executed by the test binary, we also want to obtain code coverage from code executed by the test runner. Otherwise, for each integration test you add, the setup code (which is run by the test runner, not the test binary) will be considered uncovered, and for a large setup step it may appear that your PR _decreases_ coverage on net. Go doesn't easily let you exclude directories from coverage reports, so it's better to just track the coverage from both the runner and the binary.

The binary expects a GOCOVERDIR env var but the test runner expects a test.gocoverdir positional arg. If you pass the positional arg, it will internally overwrite GOCOVERDIR to some random temp directory, and if you then pass that to the test binary, it doesn't seem to actually write to it by the time the test finishes. To get around that, we're using LAZYGIT_GOCOVERDIR, and within the test runner we're mapping that to GOCOVERDIR before running the test binary, so they both end up writing to the same directory. Coverage data files are named to avoid conflicts, including something unique to the process, so we don't need to worry about name collisions between the test runner and the test binary's coverage files. We then merge the files together purely for the sake of having fewer artefacts to upload.

## Misc

Initially I was able to have all the instances of '/tmp/code_coverage' confined to the ci.yml, which was good because it was all in one place, but now it's spread across ci.yml and scripts/run_integration_tests.sh. I don't feel great about that but can't think of a way to make it cleaner.

I believe there's a use case for running scripts/run_integration_tests.sh outside of CI (so that you can run tests against older git versions locally), so I've made it so that unless you pass the LAZYGIT_GOCOVERDIR env var to that script, it skips all the code coverage stuff.
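As a rough illustration of the LAZYGIT_GOCOVERDIR mapping described above, here is a minimal sketch of how a test runner could build the environment for the test binary. The function name `coverEnvForTestBinary` and the example paths are hypothetical and not taken from this PR; the only behaviour assumed is what the description states: LAZYGIT_GOCOVERDIR is mapped to GOCOVERDIR, and nothing coverage-related happens when it is unset.

```go
package main

import (
	"fmt"
	"strings"
)

// coverEnvForTestBinary is a hypothetical helper (not lazygit's actual code).
// Given the runner's environment as KEY=VALUE entries, it returns the
// environment to hand to the test binary. If LAZYGIT_GOCOVERDIR is set, any
// existing GOCOVERDIR entry is dropped and replaced with that value, so the
// runner and the binary write coverage data to the same directory. If it is
// not set, the environment is returned untouched and coverage is skipped.
func coverEnvForTestBinary(env []string) []string {
	const src = "LAZYGIT_GOCOVERDIR="
	coverDir := ""
	for _, kv := range env {
		if strings.HasPrefix(kv, src) {
			coverDir = strings.TrimPrefix(kv, src)
		}
	}
	if coverDir == "" {
		return env
	}

	out := make([]string, 0, len(env)+1)
	for _, kv := range env {
		// Drop the temp directory that the test framework may have put here.
		if !strings.HasPrefix(kv, "GOCOVERDIR=") {
			out = append(out, kv)
		}
	}
	return append(out, "GOCOVERDIR="+coverDir)
}

func main() {
	env := []string{
		"PATH=/usr/bin",
		"GOCOVERDIR=/tmp/some-random-temp-dir",
		"LAZYGIT_GOCOVERDIR=/tmp/code_coverage",
	}
	fmt.Println(coverEnvForTestBinary(env))
}
```

In a real runner the result would presumably be assigned to the `Env` field of the `exec.Cmd` that launches the test binary.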
On a separate note: it seems that Go's coverage report is based on the percentage of statements executed, whereas Codacy cares more about lines of code executed, so Codacy reports a higher percentage (e.g. 82%) than Go's own coverage report (74%).
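To see how a statement-based and a line-based percentage can diverge on the same data, here is a toy calculation. The `block` type loosely mirrors one entry of a Go cover profile (a line range, a statement count, and an execution count). The line-based function is only an assumption about what a line-oriented tool might do, not Codacy's actual algorithm.

```go
package main

import "fmt"

// block holds the fields of one cover profile entry that matter here.
type block struct {
	startLine, endLine int // inclusive line range of the block
	numStmts           int // number of statements in the block
	count              int // how many times the block was executed
}

// statementCoverage returns the percentage of statements executed at least once.
func statementCoverage(blocks []block) float64 {
	total, covered := 0, 0
	for _, b := range blocks {
		total += b.numStmts
		if b.count > 0 {
			covered += b.numStmts
		}
	}
	if total == 0 {
		return 0
	}
	return 100 * float64(covered) / float64(total)
}

// lineCoverage returns the percentage of lines, among all lines belonging to
// some block, that belong to at least one executed block.
func lineCoverage(blocks []block) float64 {
	lines := map[int]bool{} // line number -> executed?
	for _, b := range blocks {
		for l := b.startLine; l <= b.endLine; l++ {
			lines[l] = lines[l] || b.count > 0
		}
	}
	if len(lines) == 0 {
		return 0
	}
	covered := 0
	for _, executed := range lines {
		if executed {
			covered++
		}
	}
	return 100 * float64(covered) / float64(len(lines))
}

func main() {
	blocks := []block{
		{startLine: 1, endLine: 6, numStmts: 2, count: 1}, // two statements spread over six lines, executed
		{startLine: 7, endLine: 8, numStmts: 2, count: 0}, // two statements on two lines, never executed
	}
	fmt.Printf("statements: %.0f%%\n", statementCoverage(blocks)) // statements: 50%
	fmt.Printf("lines: %.0f%%\n", lineCoverage(blocks))           // lines: 75%
}
```

Executed code that spans many lines per statement (multi-line calls, struct literals and so on) pushes the line-based number above the statement-based one, which is one plausible explanation for the gap.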

Integration Tests

The pkg/integration package is for integration testing: that is, actually running a real lazygit session and having a robot pretend to be a human user and then making assertions that everything works as expected.

TL;DR: integration tests live in pkg/integration/tests. Run integration tests with:

go run cmd/integration_test/main.go tui

or

go run cmd/integration_test/main.go cli [--slow or --sandbox] [testname or testpath...]

Writing tests

The tests live in pkg/integration/tests. Each test is registered in pkg/integration/tests/test_list.go which is an auto-generated file. You can re-generate that file by running go generate ./... at the root of the Lazygit repo.

Each test has two important steps: the setup step and the run step.

Setup step

In the setup step, we prepare a repo with shell commands, for example, creating a merge conflict that will need to be resolved upon opening lazygit. This is all done via the shell argument.

Run step

The run step has two arguments passed in:

- t (the test driver)
- keys

t is for driving the gui by pressing certain keys, selecting list items, etc.

keys is for use when getting the test to press a particular key e.g. t.Views().Commits().Focus().PressKey(keys.Universal.Confirm)
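The split between the setup step and the run step can be pictured with a small self-contained model. Everything in this sketch (`toyShell`, `toyDriver`, `toyKeys`, `toyTest`, `execute`) is a hypothetical stand-in invented for illustration, not lazygit's real test API; the real tests in pkg/integration/tests are the reference for how a test is actually declared.

```go
package main

import "fmt"

// toyShell is a stand-in for the shell argument: it only records commands.
type toyShell struct{ commands []string }

func (s *toyShell) Run(cmd string) { s.commands = append(s.commands, cmd) }

// toyKeys is a stand-in for the keybinding config passed to the run step.
type toyKeys struct{ Confirm string }

// toyDriver is a stand-in for the test driver: it only records key presses.
type toyDriver struct{ pressed []string }

func (d *toyDriver) PressKey(key string) { d.pressed = append(d.pressed, key) }

// toyTest pairs a setup step (prepares the repo via the shell) with a run
// step (drives the UI via the driver and the keybindings).
type toyTest struct {
	Setup func(shell *toyShell)
	Run   func(t *toyDriver, keys toyKeys)
}

// execute runs the setup step to completion before the run step starts and
// returns what each step recorded.
func execute(test toyTest) (*toyShell, *toyDriver) {
	shell := &toyShell{}
	test.Setup(shell)

	driver := &toyDriver{}
	test.Run(driver, toyKeys{Confirm: "<enter>"})
	return shell, driver
}

func main() {
	shell, driver := execute(toyTest{
		Setup: func(shell *toyShell) {
			shell.Run("git init")
			shell.Run("git commit --allow-empty -m 'first commit'")
		},
		Run: func(t *toyDriver, keys toyKeys) {
			t.PressKey(keys.Confirm)
		},
	})
	fmt.Println(shell.commands, driver.pressed)
}
```

The point of the model is only the ordering and the division of labour: repo state is built with shell commands first, and key presses and assertions happen afterwards against that state.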

Running tests

There are three ways to invoke a test:

- go run cmd/integration_test/main.go cli [--slow or --sandbox] [testname or testpath...]

- go run cmd/integration_test/main.go tui

- go test pkg/integration/clients/*.go

The first, the test runner, is for directly running a test from the command line. If you pass no arguments, it runs all tests.

The second, the TUI, is for running tests from a terminal UI where it's easier to find a test and run it without having to copy its name and paste it into the terminal. This is the easiest approach by far.

The third, the go-test command, is intended only for use in CI, to be run along with the other go test tests. This runs the tests in headless mode so there's no visual output.

The name of a test is based on its path, so the name of the test at pkg/integration/tests/commit/new_branch.go is commit/new_branch. So to run it with our test runner you would run go run cmd/integration_test/main.go cli commit/new_branch.
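The path-to-name rule above can be written down in a few lines. This is a sketch of the rule as described, with a hypothetical function name `testNameFromPath`; it is not the code lazygit uses to register tests.

```go
package main

import (
	"fmt"
	"strings"
)

// testsDir is the directory that all integration tests live under.
const testsDir = "pkg/integration/tests/"

// testNameFromPath derives a test's name from its file path by stripping the
// tests directory prefix and the .go extension.
func testNameFromPath(path string) string {
	name := strings.TrimPrefix(path, testsDir)
	return strings.TrimSuffix(name, ".go")
}

func main() {
	// prints "commit/new_branch"
	fmt.Println(testNameFromPath("pkg/integration/tests/commit/new_branch.go"))
}
```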

You can pass the INPUT_DELAY env var to the test runner in order to set a delay in milliseconds between keypresses or mouse clicks, which helps for watching a test at a realistic speed to understand what it's doing. Or you can pass the '--slow' flag which sets a pre-set 'slow' key delay. In the tui you can press 't' to run the test in slow mode.
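For a sense of what the INPUT_DELAY value means, here is a minimal sketch that turns a millisecond string into a Go duration. The helper `keyDelay` and its fallback behaviour (treating empty, invalid, or negative input as no delay) are assumptions made for this example, not a description of how the test runner actually parses the variable.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"time"
)

// keyDelay converts the raw INPUT_DELAY value (milliseconds) into a
// time.Duration. Empty, non-numeric, or negative values yield zero, which
// in this sketch means "no delay between inputs".
func keyDelay(raw string) time.Duration {
	ms, err := strconv.Atoi(raw)
	if err != nil || ms < 0 {
		return 0
	}
	return time.Duration(ms) * time.Millisecond
}

func main() {
	// Example invocation: INPUT_DELAY=200 go run .
	fmt.Println(keyDelay(os.Getenv("INPUT_DELAY")))
}
```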

The resultant repo will be stored in test/_results, so if you're not sure what went wrong you can go there and inspect the repo.

Running tests in VSCode

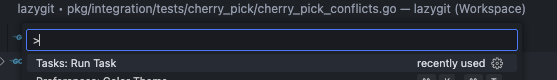

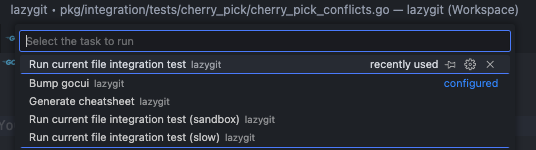

If you've opened an integration test file in your editor, you can run that file by bringing up the command panel with cmd+shift+p and typing 'run task', then selecting the test task you want to run.

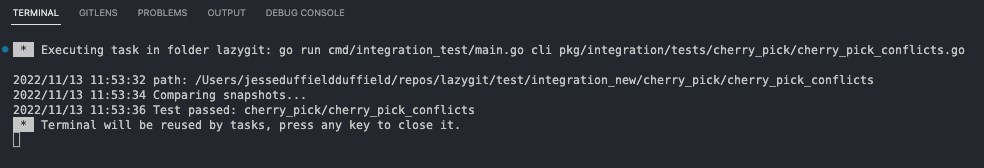

The test will run in a VSCode terminal:


Debugging tests

Debugging an integration test is possible in two ways:

- Use the -debug option of the integration test runner's "cli" command, e.g.

  go run cmd/integration_test/main.go cli -debug tag/reset.go

- Select a test in the "tui" runner and hit "d" to debug it.

In both cases the test runner will print to the console that it is waiting for a debugger to attach, so now you need to tell your debugger to attach to a running process with the name "test_lazygit". If you are using Visual Studio Code, an easy way to do that is to use the "Attach to integration test runner" debug configuration. The test runner will resume automatically when it detects that a debugger was attached. Don't forget to set a breakpoint in the code that you want to step through, otherwise the test will just finish (i.e. it doesn't stop in the debugger automatically).

Sandbox mode

Say you want to do a manual test of how lazygit handles merge-conflicts, but you can't be bothered actually finding a way to create merge conflicts in a repo. To make your life easier, you can simply run a merge-conflicts test in sandbox mode, meaning the setup step is run for you, and then instead of the test driving the lazygit session, you're allowed to drive it yourself.

To run a test in sandbox mode, press 's' on a test in the test TUI, or pass the --sandbox argument to the test runner.

Tips for writing tests

Handle most setup in the shell part of the test

Try to do as much setup work as possible in your setup step. For example, if all you're testing is that the user is able to resolve merge conflicts, create the merge conflicts in the setup step. On the other hand, if you're testing to see that lazygit can warn the user about merge conflicts after an attempted merge, it's fine to wait until the run step to actually create the conflicts. If the run step is focused on the thing you're trying to test, the test will run faster and its intent will be clearer.

Create helper functions for (very) frequently used test logic

If within a test directory you find several tests need to share some logic, you can create a file called shared.go in that directory to hold shared helper functions (see pkg/integration/tests/filter_by_path/shared.go for an example).

If you need to share test logic across test directories you can put helper functions in the tests/shared package. If you find yourself frequently doing the same thing from within a test across test directories, for example, responding to a particular popup, consider adding a helper method to pkg/integration/components/common.go. If you look around the code in the components directory you may find another place that's sensible to put your helper function.

Don't do too much in one test

If you're testing different pieces of functionality, it's better to test them in isolation using multiple short tests, compared to one larger longer test. Sometimes it's appropriate to have a longer test which tests how various different pieces interact, but err on the side of keeping things short.

Testing against old git versions

Our CI tests against multiple git versions. If your test fails on an old version, then to troubleshoot you'll need to install the failing git version. One option is to use rtx (see installation steps in the readme) with the git plugin like so:

rtx plugin add git

rtx install git 2.20.0

rtx local git 2.20.0