Mirror of https://github.com/laurent22/joplin.git (synced 2024-11-24 08:12:24 +02:00)

Mobile: Improved Vosk support (beta, fr only) (#8131)

Parent: 39059ae6bf
Commit: 5494e8c3dc

@@ -74,6 +74,7 @@ packages/turndown/
packages/pdf-viewer/dist
plugin_types/
readme/
packages/react-native-vosk/lib/

# AUTO-GENERATED - EXCLUDED TYPESCRIPT BUILD
packages/app-cli/app/LinkSelector.js
@@ -417,12 +418,15 @@ packages/app-mobile/components/screens/UpgradeSyncTargetScreen.js
packages/app-mobile/components/screens/encryption-config.js
packages/app-mobile/components/screens/search.js
packages/app-mobile/components/side-menu-content.js
packages/app-mobile/components/voiceTyping/VoiceTypingDialog.js
packages/app-mobile/gulpfile.js
packages/app-mobile/root.js
packages/app-mobile/services/AlarmServiceDriver.android.js
packages/app-mobile/services/AlarmServiceDriver.ios.js
packages/app-mobile/services/e2ee/RSA.react-native.js
packages/app-mobile/services/profiles/index.js
packages/app-mobile/services/voiceTyping/vosk.dummy.js
packages/app-mobile/services/voiceTyping/vosk.js
packages/app-mobile/setupQuickActions.js
packages/app-mobile/tools/buildInjectedJs.js
packages/app-mobile/utils/ShareExtension.js
@@ -807,6 +811,7 @@ packages/plugins/ToggleSidebars/api/index.js
packages/plugins/ToggleSidebars/api/types.js
packages/plugins/ToggleSidebars/src/index.js
packages/react-native-saf-x/src/index.js
packages/react-native-vosk/src/index.js
packages/renderer/HtmlToHtml.js
packages/renderer/InMemoryCache.js
packages/renderer/MarkupToHtml.js

.gitignore (vendored, 4 changed lines)

@@ -404,12 +404,15 @@ packages/app-mobile/components/screens/UpgradeSyncTargetScreen.js
packages/app-mobile/components/screens/encryption-config.js
packages/app-mobile/components/screens/search.js
packages/app-mobile/components/side-menu-content.js
packages/app-mobile/components/voiceTyping/VoiceTypingDialog.js
packages/app-mobile/gulpfile.js
packages/app-mobile/root.js
packages/app-mobile/services/AlarmServiceDriver.android.js
packages/app-mobile/services/AlarmServiceDriver.ios.js
packages/app-mobile/services/e2ee/RSA.react-native.js
packages/app-mobile/services/profiles/index.js
packages/app-mobile/services/voiceTyping/vosk.dummy.js
packages/app-mobile/services/voiceTyping/vosk.js
packages/app-mobile/setupQuickActions.js
packages/app-mobile/tools/buildInjectedJs.js
packages/app-mobile/utils/ShareExtension.js
@@ -794,6 +797,7 @@ packages/plugins/ToggleSidebars/api/index.js
packages/plugins/ToggleSidebars/api/types.js
packages/plugins/ToggleSidebars/src/index.js
packages/react-native-saf-x/src/index.js
packages/react-native-vosk/src/index.js
packages/renderer/HtmlToHtml.js
packages/renderer/InMemoryCache.js
packages/renderer/MarkupToHtml.js

@@ -15,6 +15,7 @@
"@joplin/tools",
"@joplin/react-native-saf-x",
"@joplin/react-native-alarm-notification",
"@joplin/react-native-vosk",
"@joplin/utils"
]
}

@@ -1,15 +0,0 @@
diff --git a/android/src/main/java/com/reactnativevosk/VoskModule.kt b/android/src/main/java/com/reactnativevosk/VoskModule.kt
index 0e2b6595b1b2cf1ee01c6c64239c4b0ea37fce19..e0daf712a7f2d2c2dd22e623bac6daba7f6a4ac1 100644
--- a/android/src/main/java/com/reactnativevosk/VoskModule.kt
+++ b/android/src/main/java/com/reactnativevosk/VoskModule.kt
@@ -25,7 +25,9 @@ class VoskModule(reactContext: ReactApplicationContext) : ReactContextBaseJavaMo

// Stop recording if data found
if (text != null && text.isNotEmpty()) {
- cleanRecognizer();
+ // Don't auto-stop the recogniser - we want to do that when the user
+ // presses on "stop" only.
+ // cleanRecognizer();
sendEvent("onResult", text)
}
}

@@ -96,7 +96,6 @@
"packageManager": "yarn@3.3.1",
"resolutions": {
"react-native-camera@4.2.1": "patch:react-native-camera@npm%3A4.2.1#./.yarn/patches/react-native-camera-npm-4.2.1-24b2600a7e.patch",
"rn-fetch-blob@0.12.0": "patch:rn-fetch-blob@npm%3A0.12.0#./.yarn/patches/rn-fetch-blob-npm-0.12.0-cf02e3c544.patch",
"react-native-vosk@0.1.12": "patch:react-native-vosk@npm%3A0.1.12#./.yarn/patches/react-native-vosk-npm-0.1.12-76b1caaae8.patch"
"rn-fetch-blob@0.12.0": "patch:rn-fetch-blob@npm%3A0.12.0#./.yarn/patches/rn-fetch-blob-npm-0.12.0-cf02e3c544.patch"
}
}

packages/app-mobile/.gitignore (vendored, 1 changed line)

@@ -72,3 +72,4 @@ components/NoteEditor/CodeMirror/CodeMirror.bundle.min.js
components/NoteEditor/**/*.bundle.js.md5

utils/fs-driver-android.js
android/app/build-*

@@ -29,7 +29,7 @@ import ScreenHeader from '../ScreenHeader';
const NoteTagsDialog = require('./NoteTagsDialog');
import time from '@joplin/lib/time';
const { Checkbox } = require('../checkbox.js');
const { _ } = require('@joplin/lib/locale');
import { _, currentLocale } from '@joplin/lib/locale';
import { reg } from '@joplin/lib/registry';
import ResourceFetcher from '@joplin/lib/services/ResourceFetcher';
const { BaseScreenComponent } = require('../base-screen.js');
@@ -44,7 +44,8 @@ import ShareExtension from '../../utils/ShareExtension.js';
import CameraView from '../CameraView';
import { NoteEntity } from '@joplin/lib/services/database/types';
import Logger from '@joplin/lib/Logger';
import Vosk from 'react-native-vosk';
import VoiceTypingDialog from '../voiceTyping/VoiceTypingDialog';
import { voskEnabled } from '../../services/voiceTyping/vosk';
const urlUtils = require('@joplin/lib/urlUtils');

const emptyArray: any[] = [];
@@ -53,9 +54,6 @@ const logger = Logger.create('screens/Note');

class NoteScreenComponent extends BaseScreenComponent {

private vosk_: Vosk|null = null;
private voskResult_: string[] = [];

public static navigationOptions(): any {
return { header: null };
}
@@ -89,6 +87,8 @@ class NoteScreenComponent extends BaseScreenComponent {
canUndo: false,
canRedo: false,
},

voiceTypingDialogShown: false,
};

this.saveActionQueues_ = {};
@@ -243,6 +243,8 @@ class NoteScreenComponent extends BaseScreenComponent {
this.onBodyViewerCheckboxChange = this.onBodyViewerCheckboxChange.bind(this);
this.onBodyChange = this.onBodyChange.bind(this);
this.onUndoRedoDepthChange = this.onUndoRedoDepthChange.bind(this);
this.voiceTypingDialog_onText = this.voiceTypingDialog_onText.bind(this);
this.voiceTypingDialog_onDismiss = this.voiceTypingDialog_onDismiss.bind(this);
}

private useEditorBeta(): boolean {
@@ -759,65 +761,6 @@ class NoteScreenComponent extends BaseScreenComponent {
}
}

private async getVosk() {
if (this.vosk_) return this.vosk_;
this.vosk_ = new Vosk();
await this.vosk_.loadModel('model-fr-fr');
return this.vosk_;
}

private async voiceRecording_onPress() {
logger.info('Vosk: Getting instance...');

const vosk = await this.getVosk();

this.voskResult_ = [];

const eventHandlers: any[] = [];

eventHandlers.push(vosk.onResult(e => {
logger.info('Vosk: result', e.data);
this.voskResult_.push(e.data);
}));

eventHandlers.push(vosk.onError(e => {
logger.warn('Vosk: error', e.data);
}));

eventHandlers.push(vosk.onTimeout(e => {
logger.warn('Vosk: timeout', e.data);
}));

eventHandlers.push(vosk.onFinalResult(e => {
logger.info('Vosk: final result', e.data);
}));

logger.info('Vosk: Starting recording...');

void vosk.start();

const buttonId = await dialogs.pop(this, 'Voice recording in progress...', [
{ text: 'Stop recording', id: 'stop' },
{ text: _('Cancel'), id: 'cancel' },
]);

logger.info('Vosk: Stopping recording...');
vosk.stop();

for (const eventHandler of eventHandlers) {
eventHandler.remove();
}

logger.info('Vosk: Recording stopped:', this.voskResult_);

if (buttonId === 'cancel') return;

const newNote: NoteEntity = { ...this.state.note };
newNote.body = `${newNote.body} ${this.voskResult_.join(' ')}`;
this.setState({ note: newNote });
this.scheduleSave();
}

private toggleIsTodo_onPress() {
shared.toggleIsTodo_onPress(this);

@@ -979,11 +922,13 @@ class NoteScreenComponent extends BaseScreenComponent {
},
});

if (shim.mobilePlatform() === 'android') {
// Voice typing is enabled only for French language and on Android for now
if (voskEnabled && shim.mobilePlatform() === 'android' && currentLocale() === 'fr_FR') {
output.push({
title: 'Voice recording (Beta - FR only)',
title: _('Voice typing...'),
onPress: () => {
void this.voiceRecording_onPress();
this.voiceRecording_onPress();
// this.setState({ voiceTypingDialogShown: true });
},
});
}
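
The menu entry is now gated on three conditions: `voskEnabled` (`true` in `vosk.ts`, `false` in `vosk.dummy.ts`), an Android build, and the `fr_FR` locale. Below is a minimal sketch of the same check written as a pure predicate. It takes the platform and locale as arguments instead of reading them from `shim.mobilePlatform()` and `currentLocale()`, which makes it easy to test. `shouldShowVoiceTyping` and `VoiceTypingContext` are hypothetical names and do not appear in the commit.

```typescript
// Hypothetical inputs; in Note.tsx these values come from voskEnabled,
// shim.mobilePlatform() and currentLocale().
interface VoiceTypingContext {
	voskEnabled: boolean;
	platform: string;
	locale: string;
}

// Voice typing is offered only when Vosk is bundled, on Android, in French.
const shouldShowVoiceTyping = (ctx: VoiceTypingContext): boolean =>
	ctx.voskEnabled && ctx.platform === 'android' && ctx.locale === 'fr_FR';

console.log(shouldShowVoiceTyping({ voskEnabled: true, platform: 'android', locale: 'fr_FR' })); // prints true
console.log(shouldShowVoiceTyping({ voskEnabled: true, platform: 'ios', locale: 'fr_FR' })); // prints false
```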

@@ -1107,6 +1052,25 @@ class NoteScreenComponent extends BaseScreenComponent {
void this.saveOneProperty('body', newBody);
}

private voiceTypingDialog_onText(text: string) {
if (this.state.mode === 'view') {
const newNote: NoteEntity = { ...this.state.note };
newNote.body = `${newNote.body} ${text}`;
this.setState({ note: newNote });
this.scheduleSave();
} else {
if (this.useEditorBeta()) {
this.editorRef.current.insertText(text);
} else {
logger.warn('Voice typing is not supported in plaintext editor');
}
}
}

private voiceTypingDialog_onDismiss() {
this.setState({ voiceTypingDialogShown: false });
}

public render() {
if (this.state.isLoading) {
return (
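
In view mode, `voiceTypingDialog_onText()` appends the transcript with `` `${newNote.body} ${text}` ``. This produces a leading space when the note body is empty, and a trailing space when the transcript is empty (the dummy recorder, for instance, returns `''`). Below is one possible variant, sketched on the assumption that those extra spaces are unwanted. `appendTranscript` is a hypothetical helper, not code from this commit.

```typescript
// Hypothetical helper: append a voice transcript to a note body, adding a
// separating space only when one is needed and ignoring empty transcripts.
function appendTranscript(body: string, text: string): string {
	const transcript = text.trim();
	if (!transcript) return body; // nothing was recognised
	if (!body || /\s$/.test(body)) return body + transcript; // no extra separator
	return `${body} ${transcript}`;
}

console.log(JSON.stringify(appendTranscript('Liste', 'pain et lait'))); // prints "Liste pain et lait"
console.log(JSON.stringify(appendTranscript('', 'bonjour'))); // prints "bonjour"
```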

@@ -1262,6 +1226,11 @@ class NoteScreenComponent extends BaseScreenComponent {

const noteTagDialog = !this.state.noteTagDialogShown ? null : <NoteTagsDialog onCloseRequested={this.noteTagDialog_closeRequested} />;

const renderVoiceTypingDialog = () => {
if (!this.state.voiceTypingDialogShown) return null;
return <VoiceTypingDialog onText={this.voiceTypingDialog_onText} onDismiss={this.voiceTypingDialog_onDismiss}/>;
};

return (
<View style={this.rootStyle(this.props.themeId).root}>
<ScreenHeader
@@ -1290,6 +1259,7 @@ class NoteScreenComponent extends BaseScreenComponent {
}}
/>
{noteTagDialog}
{renderVoiceTypingDialog()}
</View>
);
}
@@ -1312,6 +1282,10 @@ const NoteScreen = connect((state: any) => {
showSideMenu: state.showSideMenu,
provisionalNoteIds: state.provisionalNoteIds,
highlightedWords: state.highlightedWords,

// What we call "beta editor" in this component is actually the (now
// default) CodeMirror editor. That should be refactored to make it less
// confusing.
useEditorBeta: !state.settings['editor.usePlainText'],
};
})(NoteScreenComponent);

@@ -0,0 +1,99 @@
import * as React from 'react';
import { useState, useEffect, useCallback } from 'react';
import { Button, Dialog, Text } from 'react-native-paper';
import { _ } from '@joplin/lib/locale';
import useAsyncEffect, { AsyncEffectEvent } from '@joplin/lib/hooks/useAsyncEffect';
import { getVosk, Recorder, startRecording, Vosk } from '../../services/voiceTyping/vosk';
import { Alert } from 'react-native';

interface Props {
	onDismiss: ()=> void;
	onText: (text: string)=> void;
}

enum RecorderState {
	Loading = 1,
	Recording = 2,
	Processing = 3,
}

const useVosk = (): Vosk|null => {
	const [vosk, setVosk] = useState<Vosk>(null);

	useAsyncEffect(async (event: AsyncEffectEvent) => {
		const v = await getVosk();
		if (event.cancelled) return;
		setVosk(v);
	}, []);

	return vosk;
};

export default (props: Props) => {
	const [recorder, setRecorder] = useState<Recorder>(null);
	const [recorderState, setRecorderState] = useState<RecorderState>(RecorderState.Loading);

	const vosk = useVosk();

	useEffect(() => {
		if (!vosk) return;
		setRecorderState(RecorderState.Recording);
	}, [vosk]);

	useEffect(() => {
		if (recorderState === RecorderState.Recording) {
			setRecorder(startRecording(vosk));
		}
	}, [recorderState, vosk]);

	const onDismiss = useCallback(() => {
		recorder.cleanup();
		props.onDismiss();
	}, [recorder, props.onDismiss]);

	const onStop = useCallback(async () => {
		try {
			setRecorderState(RecorderState.Processing);
			const result = await recorder.stop();
			props.onText(result);
		} catch (error) {
			Alert.alert(error.message);
		}
		onDismiss();
	}, [recorder, onDismiss, props.onText]);

	const renderContent = () => {
		const components: Record<RecorderState, any> = {
			[RecorderState.Loading]: <Text variant="bodyMedium">Loading...</Text>,
			[RecorderState.Recording]: <Text variant="bodyMedium">Please record your voice...</Text>,
			[RecorderState.Processing]: <Text variant="bodyMedium">Converting speech to text...</Text>,
		};

		return components[recorderState];
	};

	const renderActions = () => {
		const components: Record<RecorderState, any> = {
			[RecorderState.Loading]: null,
			[RecorderState.Recording]: (
				<Dialog.Actions>
					<Button onPress={onDismiss}>Cancel</Button>
					<Button onPress={onStop}>Done</Button>
				</Dialog.Actions>
			),
			[RecorderState.Processing]: null,
		};

		return components[recorderState];
	};

	return (
		<Dialog visible={true} onDismiss={props.onDismiss}>
			<Dialog.Title>{_('Voice typing')}</Dialog.Title>
			<Dialog.Content>
				{renderContent()}
			</Dialog.Content>
			{renderActions()}
		</Dialog>
	);
};
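
The dialog above is driven by `RecorderState`. It starts in `Loading`, moves to `Recording` once `useVosk()` returns the loaded instance, and moves to `Processing` when the user presses Done. Cancel, and the end of processing, dismiss the dialog rather than change state. Below is a minimal sketch of those transitions as a pure function, which can be unit-tested without rendering the hooks. The `RecorderEvent` names and `nextState` are hypothetical and are not part of the commit.

```typescript
enum RecorderState {
	Loading = 1,
	Recording = 2,
	Processing = 3,
}

// Hypothetical events standing in for the effect on `vosk` and the Done button.
type RecorderEvent = 'modelLoaded' | 'stopPressed';

// Returns the next state, or the current one when the event does not apply.
function nextState(state: RecorderState, event: RecorderEvent): RecorderState {
	switch (state) {
	case RecorderState.Loading:
		return event === 'modelLoaded' ? RecorderState.Recording : state;
	case RecorderState.Recording:
		return event === 'stopPressed' ? RecorderState.Processing : state;
	default:
		return state; // Processing ends by dismissing the dialog.
	}
}

console.log(RecorderState[nextState(RecorderState.Loading, 'modelLoaded')]); // prints Recording
```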

@@ -346,7 +346,7 @@ PODS:
- React-Core
- react-native-version-info (1.1.1):
- React-Core
- react-native-vosk (0.1.12):
- react-native-vosk (0.1.13):
- React-Core
- react-native-webview (11.26.1):
- React-Core
@@ -507,7 +507,7 @@ DEPENDENCIES:
- "react-native-slider (from `../node_modules/@react-native-community/slider`)"
- react-native-sqlite-storage (from `../node_modules/react-native-sqlite-storage`)
- react-native-version-info (from `../node_modules/react-native-version-info`)
- react-native-vosk (from `../node_modules/react-native-vosk`)
- "react-native-vosk (from `../node_modules/@joplin/react-native-vosk`)"
- react-native-webview (from `../node_modules/react-native-webview`)
- React-perflogger (from `../node_modules/react-native/ReactCommon/reactperflogger`)
- React-RCTActionSheet (from `../node_modules/react-native/Libraries/ActionSheetIOS`)
@@ -630,7 +630,7 @@ EXTERNAL SOURCES:
react-native-version-info:
:path: "../node_modules/react-native-version-info"
react-native-vosk:
:path: "../node_modules/react-native-vosk"
:path: "../node_modules/@joplin/react-native-vosk"
react-native-webview:
:path: "../node_modules/react-native-webview"
React-perflogger:
@@ -734,7 +734,7 @@ SPEC CHECKSUMS:
react-native-slider: 33b8d190b59d4f67a541061bb91775d53d617d9d
react-native-sqlite-storage: f6d515e1c446d1e6d026aa5352908a25d4de3261
react-native-version-info: a106f23009ac0db4ee00de39574eb546682579b9
react-native-vosk: 33b8e82a46cc56f31bb4847a40efa2d160270e2e
react-native-vosk: 2e94a03a6b9a45c512b22810ac5ac111d0925bcb
react-native-webview: 9f111dfbcfc826084d6c507f569e5e03342ee1c1
React-perflogger: 8c79399b0500a30ee8152d0f9f11beae7fc36595
React-RCTActionSheet: 7316773acabb374642b926c19aef1c115df5c466

@@ -24,6 +24,7 @@ const localPackages = {
'@joplin/fork-uslug': path.resolve(__dirname, '../fork-uslug/'),
'@joplin/react-native-saf-x': path.resolve(__dirname, '../react-native-saf-x/'),
'@joplin/react-native-alarm-notification': path.resolve(__dirname, '../react-native-alarm-notification/'),
'@joplin/react-native-vosk': path.resolve(__dirname, '../react-native-vosk/'),
};

const watchedFolders = [];

@@ -21,6 +21,7 @@
"@joplin/lib": "~2.11",
"@joplin/react-native-alarm-notification": "~2.11",
"@joplin/react-native-saf-x": "~2.11",
"@joplin/react-native-vosk": "~0.1",
"@joplin/renderer": "~2.11",
"@react-native-community/clipboard": "1.5.1",
"@react-native-community/datetimepicker": "6.7.5",
@@ -66,7 +67,6 @@
"react-native-url-polyfill": "1.3.0",
"react-native-vector-icons": "9.2.0",
"react-native-version-info": "1.1.1",
"react-native-vosk": "0.1.12",
"react-native-webview": "11.26.1",
"react-redux": "7.2.9",
"redux": "4.2.1",

packages/app-mobile/services/voiceTyping/vosk.dummy.ts (normal file, 21 changed lines)

@@ -0,0 +1,21 @@
type Vosk = any;

export { Vosk };

export interface Recorder {
	stop: ()=> Promise<string>;
	cleanup: ()=> void;
}

export const getVosk = async () => {
	return {} as any;
};

export const startRecording = (_vosk: Vosk): Recorder => {
	return {
		stop: async () => { return ''; },
		cleanup: () => {},
	};
};

export const voskEnabled = false;
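
`vosk.dummy.ts` exports the same names as `vosk.ts` (`Vosk`, `Recorder`, `getVosk`, `startRecording` and `voskEnabled`), so callers can be written once against the `Recorder` interface. Below is a minimal sketch of such a caller. `transcribe` and `startDummyRecording` are hypothetical names, not part of the commit. With the dummy implementation, `transcribe` simply yields an empty transcript. It also always calls `cleanup()` after `stop()`, much as the dialog's `onStop` ends by calling `onDismiss()`, which in turn calls `recorder.cleanup()`.

```typescript
// Same shape as the Recorder interface exported by vosk.ts and vosk.dummy.ts.
interface Recorder {
	stop: () => Promise<string>;
	cleanup: () => void;
}

// Mirrors the dummy startRecording(): no native module, always an empty transcript.
const startDummyRecording = (): Recorder => ({
	stop: async () => '',
	cleanup: () => {},
});

// Hypothetical caller: wait for the transcript, then release the recorder
// whether stop() resolved or rejected.
async function transcribe(recorder: Recorder): Promise<string> {
	try {
		return await recorder.stop();
	} finally {
		recorder.cleanup();
	}
}

transcribe(startDummyRecording()).then(text => {
	console.log(JSON.stringify(text)); // prints ""
});
```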

packages/app-mobile/services/voiceTyping/vosk.ts (normal file, 113 changed lines)

@@ -0,0 +1,113 @@
import Logger from '@joplin/lib/Logger';
import Vosk from '@joplin/react-native-vosk';
const logger = Logger.create('voiceTyping/vosk');

enum State {
	Idle = 0,
	Recording,
}

let vosk_: Vosk|null = null;
let state_: State = State.Idle;

export const voskEnabled = true;

export { Vosk };

export interface Recorder {
	stop: ()=> Promise<string>;
	cleanup: ()=> void;
}

export const getVosk = async () => {
	if (vosk_) return vosk_;
	vosk_ = new Vosk();
	await vosk_.loadModel('model-fr-fr');
	return vosk_;
};

export const startRecording = (vosk: Vosk): Recorder => {
	if (state_ !== State.Idle) throw new Error('Vosk is already recording');

	state_ = State.Recording;

	const result: string[] = [];
	const eventHandlers: any[] = [];
	let finalResultPromiseResolve: Function = null;
	let finalResultPromiseReject: Function = null;
	let finalResultTimeout = false;

	const completeRecording = (finalResult: string, error: Error) => {
		logger.info(`Complete recording. Final result: ${finalResult}. Error:`, error);

		for (const eventHandler of eventHandlers) {
			eventHandler.remove();
		}

		vosk.cleanup(),

		state_ = State.Idle;

		if (error) {
			if (finalResultPromiseReject) finalResultPromiseReject(error);
		} else {
			if (finalResultPromiseResolve) finalResultPromiseResolve(finalResult);
		}
	};

	eventHandlers.push(vosk.onResult(e => {
		logger.info('Result', e.data);
		result.push(e.data);
	}));

	eventHandlers.push(vosk.onError(e => {
		logger.warn('Error', e.data);
	}));

	eventHandlers.push(vosk.onTimeout(e => {
		logger.warn('Timeout', e.data);
	}));

	eventHandlers.push(vosk.onFinalResult(e => {
		logger.info('Final result', e.data);

		if (finalResultTimeout) {
			logger.warn('Got final result - but already timed out. Not doing anything.');
			return;
		}

		completeRecording(e.data, null);
	}));

	logger.info('Starting recording...');

	void vosk.start();

	return {
		stop: (): Promise<string> => {
			logger.info('Stopping recording...');

			vosk.stopOnly();

			logger.info('Waiting for final result...');

			setTimeout(() => {
				finalResultTimeout = true;
				logger.warn('Timed out waiting for finalResult event');
				completeRecording('', new Error('Could not process your message. Please try again.'));
			}, 5000);

			return new Promise((resolve: Function, reject: Function) => {
				finalResultPromiseResolve = resolve;
				finalResultPromiseReject = reject;
			});
		},
		cleanup: () => {
			if (state_ !== State.Idle) {
				logger.info('Cancelling...');
				vosk.stopOnly();
				completeRecording('', null);
			}
		},
	};
};
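
In `stop()` above, the transcript arrives through the `onFinalResult` event, and a 5000 ms `setTimeout` serves as a fallback. The timer is never cleared, however. When the final result does arrive, the timer still fires later and calls `completeRecording()` a second time. That second call resets the module-level `state_` to `Idle` and calls `vosk.cleanup()` again, even if a new recording has started in the meantime. The `finalResultTimeout` flag only guards the opposite order (the event arriving after the timeout).

Below is a minimal sketch of the same wait-with-timeout pattern, in which whichever outcome happens first cancels the other. `waitForEvent`, `Subscribe` and the in-memory emitter are hypothetical names, not part of react-native-vosk. The subscription shape is assumed from the `remove()` calls in the code above.

```typescript
// Assumed shape of the objects returned by calls such as vosk.onFinalResult()
// (only remove() is used here).
interface Subscription {
	remove: () => void;
}

type Subscribe<T> = (listener: (value: T) => void) => Subscription;

// Resolves with the first value passed to the listener, or rejects after
// timeoutMs. Whichever happens first clears the timer and removes the
// listener, so nothing fires after the promise has settled.
// Assumes the listener is invoked asynchronously, as native events are.
function waitForEvent<T>(subscribe: Subscribe<T>, timeoutMs: number): Promise<T> {
	return new Promise<T>((resolve, reject) => {
		let subscription: Subscription | null = null;
		let timer: ReturnType<typeof setTimeout> | null = null;

		const finish = (settle: () => void) => {
			if (timer === null) return; // already settled
			clearTimeout(timer);
			timer = null;
			subscription?.remove();
			settle();
		};

		timer = setTimeout(() => finish(() => reject(new Error('Timed out waiting for final result'))), timeoutMs);
		subscription = subscribe(value => finish(() => resolve(value)));
	});
}

// Usage with a hypothetical in-memory emitter standing in for onFinalResult().
const finalResultListeners = new Set<(text: string) => void>();
const onFinalResult: Subscribe<string> = listener => {
	finalResultListeners.add(listener);
	return { remove: () => { finalResultListeners.delete(listener); } };
};

waitForEvent(onFinalResult, 5000).then(text => console.log(text)); // prints bonjour
setTimeout(() => finalResultListeners.forEach(listener => listener('bonjour')), 10);
```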

@@ -42,3 +42,5 @@ local.properties
buck-out/
\.buckd/
*.keystore

android/build-*

packages/react-native-saf-x/.gitignore (vendored, 4 changed lines)

@@ -63,4 +63,6 @@ android/keystores/debug.keystore
# generated by bob
lib/
.env
docs
docs

android/build-*

packages/react-native-vosk/.circleci/config.yml (normal file, 98 changed lines)

@@ -0,0 +1,98 @@
version: 2.1

executors:
  default:
    docker:
      - image: circleci/node:16
    working_directory: ~/project

commands:
  attach_project:
    steps:
      - attach_workspace:
          at: ~/project

jobs:
  install-dependencies:
    executor: default
    steps:
      - checkout
      - attach_project
      - restore_cache:
          keys:
            - dependencies-{{ checksum "package.json" }}
            - dependencies-
      - restore_cache:
          keys:
            - dependencies-example-{{ checksum "example/package.json" }}
            - dependencies-example-
      - run:
          name: Install dependencies
          command: |
            yarn install --cwd example --frozen-lockfile
            yarn install --frozen-lockfile
      - save_cache:
          key: dependencies-{{ checksum "package.json" }}
          paths: node_modules
      - save_cache:
          key: dependencies-example-{{ checksum "example/package.json" }}
          paths: example/node_modules
      - persist_to_workspace:
          root: .
          paths: .

  lint:
    executor: default
    steps:
      - attach_project
      - run:
          name: Lint files
          command: |
            yarn lint

  typescript:
    executor: default
    steps:
      - attach_project
      - run:
          name: Typecheck files
          command: |
            yarn typescript

  unit-tests:
    executor: default
    steps:
      - attach_project
      - run:
          name: Run unit tests
          command: |
            yarn test --coverage
      - store_artifacts:
          path: coverage
          destination: coverage

  build-package:
    executor: default
    steps:
      - attach_project
      - run:
          name: Build package
          command: |
            yarn prepare

workflows:
  build-and-test:
    jobs:
      - install-dependencies
      - lint:
          requires:
            - install-dependencies
      - typescript:
          requires:
            - install-dependencies
      - unit-tests:
          requires:
            - install-dependencies
      - build-package:
          requires:
            - install-dependencies

packages/react-native-vosk/.gitattributes (vendored, normal file, 4 changed lines)

@@ -0,0 +1,4 @@
*.pbxproj -text
# specific for windows script files
*.bat text eol=crlf
*.a filter=lfs diff=lfs merge=lfs -text

packages/react-native-vosk/.github/workflows/npm-publish.yml (vendored, normal file, 22 changed lines)

@@ -0,0 +1,22 @@
# This workflow will run tests using node and then publish a package to GitHub Packages when a release is created
# For more information see: https://help.github.com/actions/language-and-framework-guides/publishing-nodejs-packages

name: Node.js Package

on:
  release:
    types: [created]

jobs:
  publish-npm:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-node@v3
        with:
          node-version: 16
          registry-url: https://registry.npmjs.org/
      - run: yarn
      - run: npm publish
        env:
          NODE_AUTH_TOKEN: ${{secrets.NPM_TOKEN}}

packages/react-native-vosk/.gitignore (vendored, normal file, 72 changed lines)

@@ -0,0 +1,72 @@
# OSX
#
.DS_Store

# XDE
.expo/

# VSCode
.vscode/
jsconfig.json

# Xcode
#
build/
*.pbxuser
!default.pbxuser
*.mode1v3
!default.mode1v3
*.mode2v3
!default.mode2v3
*.perspectivev3
!default.perspectivev3
xcuserdata
*.xccheckout
*.moved-aside
DerivedData
*.hmap
*.ipa
*.xcuserstate
ios/.xcode.env.local
project.xcworkspace

# Android/IJ
#
.classpath
.cxx
.gradle
.idea
.project
.settings
local.properties
android.iml
.cxx/
*.keystore
!debug.keystore

# Cocoapods
#
example/ios/Pods

# Ruby
example/vendor/

# Temporary files created by Metro to check the health of the file watcher
.metro-health-check*

# node.js
#
node_modules/
npm-debug.log
yarn-debug.log
yarn-error.log

# Expo
.expo/*

# generated by bob
# lib/

# Generated by UUID
example/android/app/src/main/assets/*/uuid
android/build-*

packages/react-native-vosk/.watchmanconfig (normal file, 1 changed line)

@@ -0,0 +1 @@
{}

packages/react-native-vosk/.yarnrc (normal file, 3 changed lines)

@@ -0,0 +1,3 @@
# Override Yarn command so we can automatically setup the repo on running `yarn`

yarn-path "scripts/bootstrap.js"

195

packages/react-native-vosk/CONTRIBUTING.md

Normal file

195

packages/react-native-vosk/CONTRIBUTING.md

Normal file

@ -0,0 +1,195 @@

|

||||

# Contributing

|

||||

|

||||

We want this community to be friendly and respectful to each other. Please follow it in all your interactions with the project.

|

||||

|

||||

## Development workflow

|

||||

|

||||

To get started with the project, run `yarn` in the root directory to install the required dependencies for each package:

|

||||

|

||||

```sh

|

||||

yarn

|

||||

```

|

||||

|

||||

> While it's possible to use [`npm`](https://github.com/npm/cli), the tooling is built around [`yarn`](https://classic.yarnpkg.com/), so you'll have an easier time if you use `yarn` for development.

|

||||

|

||||

While developing, you can run the [example app](/example/) to test your changes. Any changes you make in your library's JavaScript code will be reflected in the example app without a rebuild. If you change any native code, then you'll need to rebuild the example app.

|

||||

|

||||

To start the packager:

|

||||

|

||||

```sh

|

||||

yarn example start

|

||||

```

|

||||

|

||||

To run the example app on Android:

|

||||

|

||||

```sh

|

||||

yarn example android

|

||||

```

|

||||

|

||||

To run the example app on iOS:

|

||||

|

||||

```sh

|

||||

yarn example ios

|

||||

```

Make sure your code passes TypeScript and ESLint. Run the following to verify:

```sh
yarn typescript
yarn lint
```

To fix formatting errors, run the following:

```sh
yarn lint --fix
```

Remember to add tests for your change if possible. Run the unit tests with:

```sh
yarn test
```

To edit the Objective-C files, open `example/ios/VoskExample.xcworkspace` in Xcode and find the source files at `Pods > Development Pods > react-native-vosk`.

To edit the Kotlin files, open `example/android` in Android Studio and find the source files at `reactnativevosk` under `Android`.

### Commit message convention

We follow the [conventional commits specification](https://www.conventionalcommits.org/en) for our commit messages:

- `fix`: bug fixes, e.g. fix crash due to deprecated method.
- `feat`: new features, e.g. add new method to the module.
- `refactor`: code refactor, e.g. migrate from class components to hooks.
- `docs`: changes to documentation, e.g. add usage example for the module.
- `test`: adding or updating tests, e.g. add integration tests using detox.
- `chore`: tooling changes, e.g. change CI config.

Our pre-commit hooks verify that your commit message matches this format when committing.
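The following TypeScript sketch shows the kind of header check such a hook performs. It is illustrative only and is not the project's actual hook. The hypothetical `parseCommitHeader` and `isConventionalCommit` helpers accept only the six types listed above, plus an optional `(scope)` and a `!` breaking-change marker. The real tooling may allow more types and enforce further rules.

```ts
// Commit types listed in this guide; the real hook may accept more.
const ALLOWED_TYPES: readonly string[] = ['fix', 'feat', 'refactor', 'docs', 'test', 'chore'];

// Header shape: type, optional "(scope)", optional "!" for breaking changes,
// then ": " followed by a non-empty description.
const HEADER_PATTERN = /^([a-z]+)(\([^()\r\n]+\))?(!)?: (\S.*)$/;

interface ParsedHeader {
  type: string;
  scope?: string;
  breaking: boolean;
  description: string;
}

// Hypothetical helper: parses the first line of a commit message,
// or returns null if it does not follow the convention.
function parseCommitHeader(message: string): ParsedHeader | null {
  const header = message.split('\n', 1)[0].trim();
  const match = HEADER_PATTERN.exec(header);
  if (!match) return null;
  const [, type, scope, bang, description] = match;
  if (!ALLOWED_TYPES.includes(type)) return null;
  return {
    type,
    scope: scope ? scope.slice(1, -1) : undefined, // strip the parentheses
    breaking: bang === '!',
    description,
  };
}

function isConventionalCommit(message: string): boolean {
  return parseCommitHeader(message) !== null;
}
```

For example, `fix(android): handle null hypothesis` passes, while `Fixed stuff` and `update: something` are rejected.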

### Linting and tests

[ESLint](https://eslint.org/), [Prettier](https://prettier.io/), [TypeScript](https://www.typescriptlang.org/)

We use [TypeScript](https://www.typescriptlang.org/) for type checking, [ESLint](https://eslint.org/) with [Prettier](https://prettier.io/) for linting and formatting the code, and [Jest](https://jestjs.io/) for testing.

Our pre-commit hooks verify that the linter and tests pass when committing.

### Publishing to npm

We use [release-it](https://github.com/release-it/release-it) to make it easier to publish new versions. It handles common tasks like bumping the version based on semver, creating tags and releases, etc.

To publish new versions, run the following:

```sh
yarn release
```

### Scripts

The `package.json` file contains various scripts for common tasks:

- `yarn bootstrap`: set up the project by installing all dependencies and pods.
- `yarn typescript`: type-check files with TypeScript.
- `yarn lint`: lint files with ESLint.
- `yarn test`: run unit tests with Jest.
- `yarn example start`: start the Metro server for the example app.
- `yarn example android`: run the example app on Android.
- `yarn example ios`: run the example app on iOS.

### Sending a pull request

> **Working on your first pull request?** You can learn how from this _free_ series: [How to Contribute to an Open Source Project on GitHub](https://app.egghead.io/playlists/how-to-contribute-to-an-open-source-project-on-github).

When you're sending a pull request:

- Prefer small pull requests focused on one change.
- Verify that linters and tests are passing.
- Review the documentation to make sure it looks good.
- Follow the pull request template when opening a pull request.
- For pull requests that change the API or implementation, discuss with maintainers first by opening an issue.

## Code of Conduct

### Our Pledge

We as members, contributors, and leaders pledge to make participation in our community a harassment-free experience for everyone, regardless of age, body size, visible or invisible disability, ethnicity, sex characteristics, gender identity and expression, level of experience, education, socio-economic status, nationality, personal appearance, race, religion, or sexual identity and orientation.

We pledge to act and interact in ways that contribute to an open, welcoming, diverse, inclusive, and healthy community.

### Our Standards

Examples of behavior that contributes to a positive environment for our community include:

- Demonstrating empathy and kindness toward other people
- Being respectful of differing opinions, viewpoints, and experiences
- Giving and gracefully accepting constructive feedback
- Accepting responsibility and apologizing to those affected by our mistakes, and learning from the experience
- Focusing on what is best not just for us as individuals, but for the overall community

Examples of unacceptable behavior include:

- The use of sexualized language or imagery, and sexual attention or advances of any kind
- Trolling, insulting or derogatory comments, and personal or political attacks
- Public or private harassment
- Publishing others' private information, such as a physical or email address, without their explicit permission
- Other conduct which could reasonably be considered inappropriate in a professional setting

### Enforcement Responsibilities

Community leaders are responsible for clarifying and enforcing our standards of acceptable behavior and will take appropriate and fair corrective action in response to any behavior that they deem inappropriate, threatening, offensive, or harmful.

Community leaders have the right and responsibility to remove, edit, or reject comments, commits, code, wiki edits, issues, and other contributions that are not aligned to this Code of Conduct, and will communicate reasons for moderation decisions when appropriate.

### Scope

This Code of Conduct applies within all community spaces, and also applies when an individual is officially representing the community in public spaces. Examples of representing our community include using an official e-mail address, posting via an official social media account, or acting as an appointed representative at an online or offline event.

### Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be reported to the community leaders responsible for enforcement at [INSERT CONTACT METHOD]. All complaints will be reviewed and investigated promptly and fairly.

All community leaders are obligated to respect the privacy and security of the reporter of any incident.

### Enforcement Guidelines

Community leaders will follow these Community Impact Guidelines in determining the consequences for any action they deem in violation of this Code of Conduct:

#### 1. Correction

**Community Impact**: Use of inappropriate language or other behavior deemed unprofessional or unwelcome in the community.

**Consequence**: A private, written warning from community leaders, providing clarity around the nature of the violation and an explanation of why the behavior was inappropriate. A public apology may be requested.

#### 2. Warning

**Community Impact**: A violation through a single incident or series of actions.

**Consequence**: A warning with consequences for continued behavior. No interaction with the people involved, including unsolicited interaction with those enforcing the Code of Conduct, for a specified period of time. This includes avoiding interactions in community spaces as well as external channels like social media. Violating these terms may lead to a temporary or permanent ban.

#### 3. Temporary Ban

**Community Impact**: A serious violation of community standards, including sustained inappropriate behavior.

**Consequence**: A temporary ban from any sort of interaction or public communication with the community for a specified period of time. No public or private interaction with the people involved, including unsolicited interaction with those enforcing the Code of Conduct, is allowed during this period. Violating these terms may lead to a permanent ban.

#### 4. Permanent Ban

**Community Impact**: Demonstrating a pattern of violation of community standards, including sustained inappropriate behavior, harassment of an individual, or aggression toward or disparagement of classes of individuals.

**Consequence**: A permanent ban from any sort of public interaction within the community.

### Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 2.0,
available at https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

Community Impact Guidelines were inspired by [Mozilla's code of conduct enforcement ladder](https://github.com/mozilla/diversity).

[homepage]: https://www.contributor-covenant.org

For answers to common questions about this code of conduct, see the FAQ at
https://www.contributor-covenant.org/faq. Translations are available at https://www.contributor-covenant.org/translations.

20

packages/react-native-vosk/LICENSE

Normal file

@ -0,0 +1,20 @@

MIT License

Copyright (c) 2022 Joris Gaudin
Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

123

packages/react-native-vosk/README.md

Normal file

@ -0,0 +1,123 @@

# react-native-vosk - React ASR (Automatic Speech Recognition)

* * *

**Joplin fork** of `react-native-vosk@0.1.12` with the following changes:

- The `onResult()` event doesn't automatically stop the recorder, because we need it to keep running so that it captures the whole text. The original package was designed to record one keyword, but we need whole sentences.
- Added the `stopOnly()` method, because the original `stop()` method didn't just stop recording but also cleared everything, which prevented the useful `onFinalResult()` event from being emitted. We need this event to get the final text.
- Added the `cleanup()` method. It should be called once the `onFinalResult()` event has been received, and does the same as the original `stop()` method.
- The `ios/Vosk/vosk-model-spk-0.4` folder was deleted because its purpose was unclear, and we don't build the iOS version anyway. If it's ever needed, it can be restored from the original repo: https://github.com/riderodd/react-native-vosk
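The TypeScript sketch below shows how a caller might drive this fork to capture a whole dictation with the lifecycle described above: start, collect `onResult` text, call `stopOnly()`, wait for `onFinalResult`, then `cleanup()`.

It assumes an interface modeled on the methods and events named in this README, with listener payloads of the form `{ data }` and subscriptions exposing `remove()`. The package's exact type definitions are not shown here, so `VoskLike`, `dictate`, and the return types (for example, that `stopOnly()` returns nothing) are hypothetical.

```ts
// Hypothetical shapes modeled on the methods and events described in this README;
// the package's real type definitions may differ.
interface VoskEvent { data?: string }
interface Subscription { remove(): void }
interface VoskLike {
  start(): Promise<unknown>;
  stopOnly(): void;
  cleanup(): void;
  onResult(cb: (e: VoskEvent) => void): Subscription;
  onFinalResult(cb: (e: VoskEvent) => void): Subscription;
}

// Records until `untilStopped` resolves, then returns all recognized text joined by spaces.
async function dictate(
  vosk: VoskLike,
  untilStopped: Promise<void>,
  finalTimeoutMs = 2000,
): Promise<string> {
  const parts: string[] = [];
  const resultSub = vosk.onResult(e => { if (e.data) parts.push(e.data); });

  // Listen for the final result before stopping so the event cannot be missed.
  let resolveFinal: (text: string) => void = () => {};
  const finalText = new Promise<string>(resolve => { resolveFinal = resolve; });
  const finalSub = vosk.onFinalResult(e => resolveFinal(e.data ?? ''));

  // Not awaited: in this fork, recognition keeps running after each onResult.
  vosk.start().catch(error => console.error(error));
  await untilStopped;

  vosk.stopOnly(); // stop recording but keep the recognizer so onFinalResult can fire
  // The Android module skips onFinalResult when the final text is empty, so don't wait forever.
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<string>(resolve => { timer = setTimeout(() => resolve(''), finalTimeoutMs); });
  const last = await Promise.race([finalText, timeout]);
  clearTimeout(timer);
  if (last) parts.push(last);

  finalSub.remove();
  resultSub.remove();
  vosk.cleanup(); // does what the original stop() did
  return parts.join(' ');
}
```

The timeout is there because the final-result event is only sent when there is non-empty text. Without it, a silent final segment would leave the caller waiting indefinitely.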

* * *

Speech recognition module for React Native using the [Vosk](https://github.com/alphacep/vosk-api) library.

## Installation

### Library

```sh
npm install -S react-native-vosk
```

### Models

Vosk uses prebuilt models to perform speech recognition offline. You have to download the model(s) that you need from the [official Vosk website](https://alphacephei.com/vosk/models).

Avoid models that are too large, because the time required to load them into your app can lead to a poor user experience.

Then, unzip the model in your app folder. If you only need the iOS version, put the model folder wherever you want and import it as described below. If you need both iOS and Android to work, you can avoid copying the model twice by importing it from the Android assets folder in Xcode. Proceed as follows:

|

||||

|

||||

### Android

|

||||

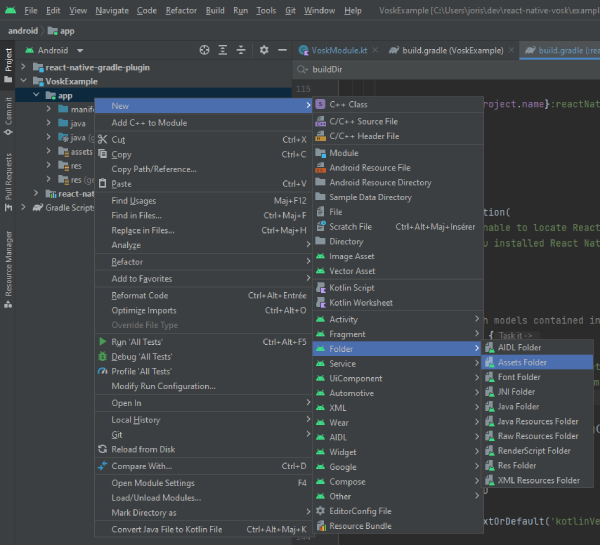

In Android Studio, open the project manager, right-click on your project folder and New > Folder > Assets folder.

|

||||

|

||||

|

||||

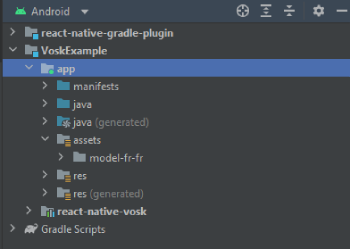

Then put the model folder inside the assets folder created. In your file tree it should be located in android\app\src\main\assets. So, if you downloaded the french model named model-fr-fr, you should access the model by going to android\app\src\main\assets\model-fr-fr. In Android studio, your project structure should be like that:

|

||||

|

||||

|

||||

You can import as many models as you want.

|

||||

|

||||

### iOS

|

||||

In order to let the project work, you're going to need the iOS library. Mail contact@alphacephei.com to get the libraries. You're going to have a libvosk.xcframework file (or folder for not mac users), just copy it in the ios folder of the module (node_modules/react-native-vosk/ios/libvosk.xcframework). Then run in your root project:

|

||||

```sh

|

||||

npm run pods

|

||||

```

|

||||

|

||||

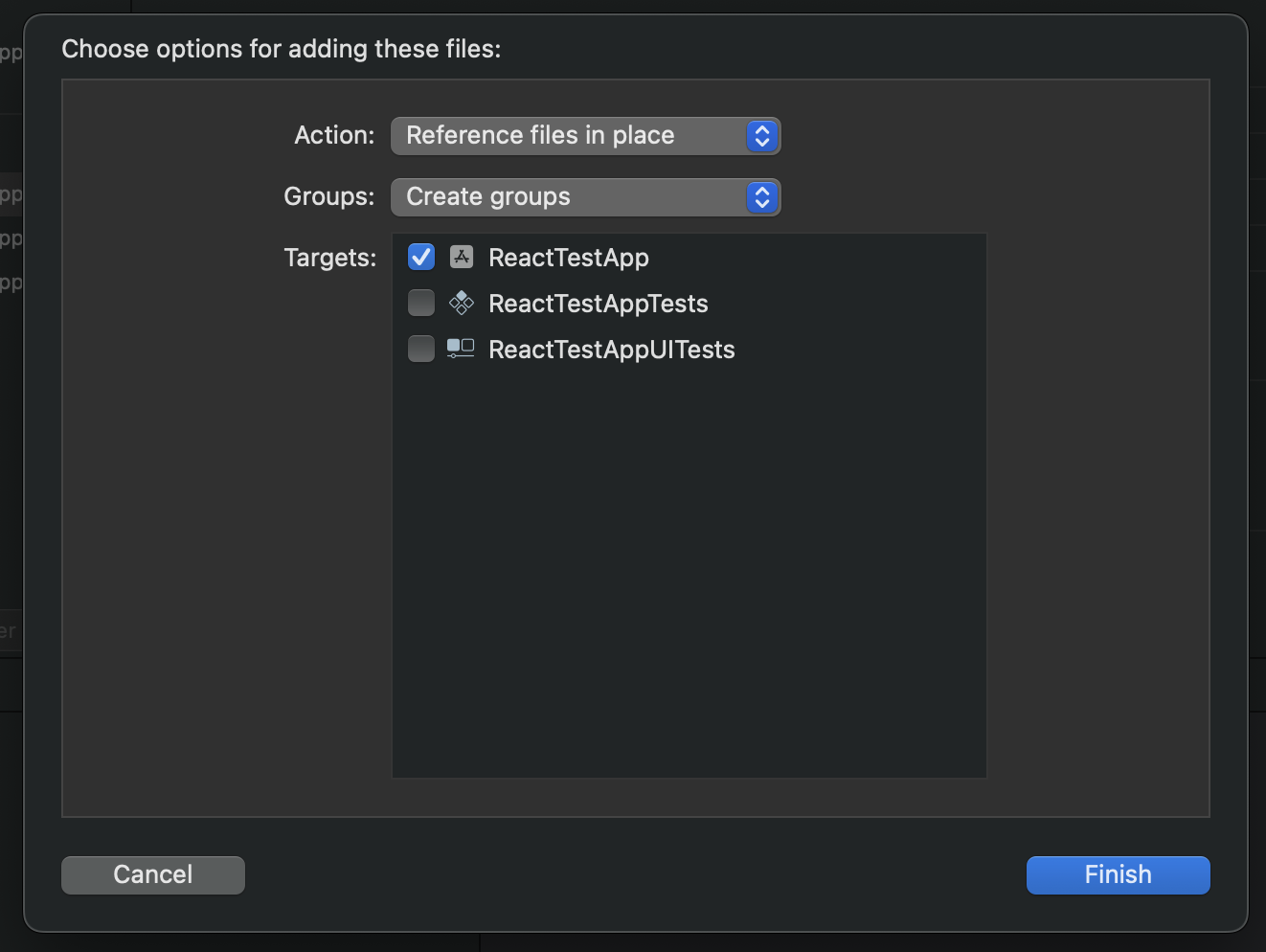

In XCode, right-click on your project folder, and click on "Add files to [your project name]".

|

||||

|

||||

|

||||

|

||||

Then navigate to your model folder. You can navigate to your Android assets folder as mentionned before, and chose your model here. It will avoid to have the model copied twice in your project. If you don't use the Android build, you can just put the model wherever you want, and select it.

|

||||

|

||||

|

||||

|

||||

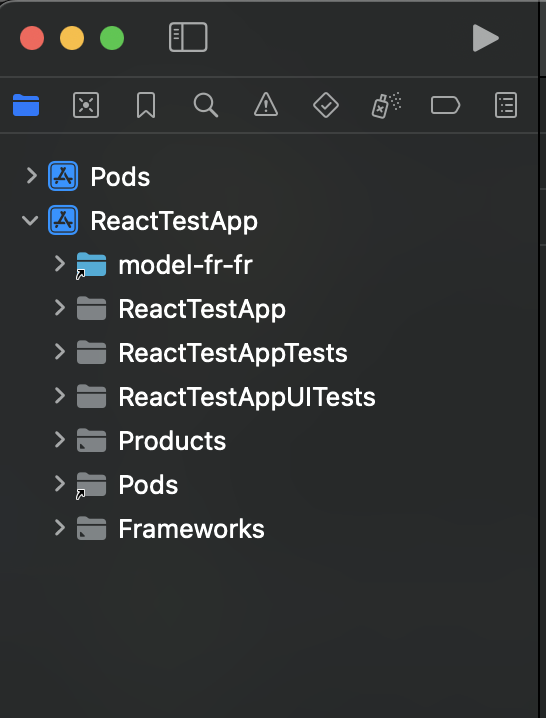

That's all. The model folder should appear in your project. When you click on it, your project target should be checked (see below).

## Usage

```js
import Vosk from 'react-native-vosk';

// ...

const vosk = new Vosk();

vosk.loadModel('model-fr-fr').then(() => {
    // we can use promise...
    vosk
        .start()
        .then((res) => {
            console.log('Result is: ' + res);
        });

    // ... or events
    const resultEvent = vosk.onResult((res) => {
        console.log('An onResult event has been caught: ' + res.data);
    });

    // Don't forget to call resultEvent.remove(); when needed
}).catch(e => {
    console.error(e);
});
```

Note that the `start()` method will ask for the audio recording permission.

[Complete example...](https://github.com/riderodd/react-native-vosk/blob/main/example/src/App.tsx)

### Methods

| Method | Argument | Return | Description |
|---|---|---|---|
| `loadModel` | `path: string` | `Promise` | Loads the voice model used for recognition. Required before calling `start()` |
| `start` | `grammar: string[]` or `none` | `Promise` | Starts voice recognition and returns the recognized text as a promised string. You can restrict recognition to specific words with the `grammar` argument (e.g. `["left", "right"]`), as described in Kaldi's documentation |
| `stop` | `none` | `none` | Stops the recognition |
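The `grammar` argument ends up as a single string on the native side. The last helper in `VoskModule` is documented as translating an array of strings "to required kaldi string format". The minimal sketch below assumes that format is the JSON array of phrases that Vosk's `Recognizer` accepts as a grammar. `toKaldiGrammar` is a hypothetical name and is not part of this package. Vosk also recognizes the special `"[unk]"` phrase, which lets out-of-list speech map to an unknown token instead of being forced onto the closest allowed word.

```ts
// Hypothetical helper: turns a list of phrases into a JSON grammar string for Vosk.
function toKaldiGrammar(phrases: readonly string[], allowUnknown = false): string {
  const cleaned = phrases
    .map(p => p.trim())          // drop surrounding whitespace
    .filter(p => p.length > 0);  // skip empty phrases
  if (allowUnknown) cleaned.push('[unk]');
  // JSON.stringify takes care of quoting and escaping each phrase.
  return JSON.stringify(cleaned);
}
```

For example, `toKaldiGrammar(["left", "right"], true)` yields `["left","right","[unk]"]`.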

### Events

| Event | Data | Description |
|---|---|---|
| `onResult` | The recognized text as a `string` | Triggers on voice recognition result |
| `onFinalResult` | The recognized text as a `string` | Triggers when recognition is stopped using the `stopOnly()` method |
| `onError` | The error that occurred as a `string` or `exception` | Triggers if an error occurred |
| `onTimeout` | "timeout" `string` | Triggers on timeout |
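On Android, `VoskModule` in this change extracts the `text` field from Vosk's JSON hypothesis before emitting `onResult` and `onFinalResult`. For `onPartialResult`, however, it forwards the raw JSON string.

The TypeScript sketch below performs a similar extraction on the JavaScript side. It assumes Vosk's usual payloads, `{"text": "..."}` for results and `{"partial": "..."}` for partial results. `getHypothesisText` borrows the Kotlin helper's name for illustration and, like it, returns `null` when parsing fails.

```ts
type HypothesisKey = 'text' | 'partial';

// Similar to the Kotlin getHypothesisText(): returns the recognized text,
// or null if the input is not a JSON object containing a string under `key`.
function getHypothesisText(hypothesis: string, key: HypothesisKey = 'text'): string | null {
  try {
    const parsed: unknown = JSON.parse(hypothesis);
    if (typeof parsed !== 'object' || parsed === null) return null;
    const value = (parsed as Record<string, unknown>)[key];
    return typeof value === 'string' ? value : null;
  } catch {
    return null; // malformed JSON
  }
}
```

If your code listens to partial results, `getHypothesisText(res.data, 'partial')` would give the in-progress text.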

#### Example

```js
const resultEvent = vosk.onResult((res) => {
    console.log('An onResult event has been caught: ' + res.data);
});

resultEvent.remove();
```

Don't forget to remove the event listener once you don't need it anymore.
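When several listeners are registered (for example `onResult`, `onFinalResult` and `onError`), it can help to keep their subscriptions together and remove them in one call. The minimal sketch below assumes only what the example above shows: each listener method returns an object with a `remove()` method. `ListenerBag` is a hypothetical helper and is not part of this package.

```ts
interface Subscription { remove(): void }

// Collects subscriptions so they can all be removed at once, e.g. when a screen unmounts.
class ListenerBag {
  private subs: Subscription[] = [];

  add(sub: Subscription): Subscription {
    this.subs.push(sub);
    return sub;
  }

  removeAll(): void {
    // Empty the bag first so a second call is a no-op, then remove in reverse registration order.
    for (const sub of this.subs.splice(0).reverse()) sub.remove();
  }

  get size(): number {
    return this.subs.length;
  }
}
```

With React hooks, you would typically call `bag.removeAll()` from the `useEffect` cleanup function.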

## Contributing

See the [contributing guide](CONTRIBUTING.md) to learn how to contribute to the repository and the development workflow.

## License

MIT

107

packages/react-native-vosk/android/build.gradle

Normal file

@ -0,0 +1,107 @@

buildscript {
    // Buildscript is evaluated before everything else so we can't use getExtOrDefault
    def kotlin_version = rootProject.ext.has("kotlinVersion") ? rootProject.ext.get("kotlinVersion") : project.properties["Vosk_kotlinVersion"]

    repositories {
        google()
        mavenCentral()
    }

    dependencies {
        classpath "com.android.tools.build:gradle:7.2.1"
        // noinspection DifferentKotlinGradleVersion
        classpath "org.jetbrains.kotlin:kotlin-gradle-plugin:$kotlin_version"
    }
}

def isNewArchitectureEnabled() {
    return rootProject.hasProperty("newArchEnabled") && rootProject.getProperty("newArchEnabled") == "true"
}

apply plugin: "com.android.library"
apply plugin: "kotlin-android"

def appProject = rootProject.allprojects.find { it.plugins.hasPlugin('com.android.application') }

if (isNewArchitectureEnabled()) {
    apply plugin: "com.facebook.react"
}

def getExtOrDefault(name) {
    return rootProject.ext.has(name) ? rootProject.ext.get(name) : project.properties["Vosk_" + name]
}

def getExtOrIntegerDefault(name) {
    return rootProject.ext.has(name) ? rootProject.ext.get(name) : (project.properties["Vosk_" + name]).toInteger()
}

android {
    compileSdkVersion getExtOrIntegerDefault('compileSdkVersion')

    defaultConfig {
        minSdkVersion getExtOrIntegerDefault("minSdkVersion")
        targetSdkVersion getExtOrIntegerDefault("targetSdkVersion")
        buildConfigField "boolean", "IS_NEW_ARCHITECTURE_ENABLED", isNewArchitectureEnabled().toString()
    }
    buildTypes {
        release {
            minifyEnabled false
        }
    }

    lintOptions {
        disable 'GradleCompatible'
    }

    compileOptions {
        sourceCompatibility JavaVersion.VERSION_1_8
        targetCompatibility JavaVersion.VERSION_1_8
    }
}

repositories {
    mavenCentral()
    google()
}

// Generate UUIDs for each model contained in android/src/main/assets/

// We don't want this because it's going to generate a different one on each
// build, even when nothing has changed.

// tasks.register('genUUID') {
//     doLast {
//         fileTree(dir: "$rootDir/app/src/main/assets", exclude: ['*/*']).visit { fileDetails ->
//             if (fileDetails.directory) {
//                 def odir = file("$rootDir/app/src/main/assets/$fileDetails.relativePath")
//                 def ofile = file("$odir/uuid")
//                 mkdir odir
//                 ofile.text = UUID.randomUUID().toString()
//             }
//         }
//     }
// }
// preBuild.dependsOn genUUID

def kotlin_version = getExtOrDefault('kotlinVersion')

dependencies {
    // For < 0.71, this will be from the local maven repo
    // For > 0.71, this will be replaced by `com.facebook.react:react-android:$version` by react gradle plugin
    //noinspection GradleDynamicVersion
    implementation "com.facebook.react:react-native:+"
    implementation "org.jetbrains.kotlin:kotlin-stdlib:$kotlin_version"
    // From node_modules
    implementation 'net.java.dev.jna:jna:5.12.1@aar'
    implementation 'com.alphacephei:vosk-android:0.3.46@aar'
}

if (isNewArchitectureEnabled()) {
    react {
        jsRootDir = file("../src/")
        libraryName = "Vosk"
        codegenJavaPackageName = "com.reactnativevosk"
    }
}

5

packages/react-native-vosk/android/gradle.properties

Normal file

@ -0,0 +1,5 @@

Vosk_kotlinVersion=1.7.0
Vosk_minSdkVersion=21
Vosk_targetSdkVersion=31
Vosk_compileSdkVersion=31
Vosk_ndkversion=21.4.7075529

@ -0,0 +1,4 @@

<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.reactnativevosk">
    <uses-permission android:name="android.permission.RECORD_AUDIO" />
</manifest>

@ -0,0 +1,192 @@

package com.reactnativevosk

import com.facebook.react.bridge.*
import com.facebook.react.modules.core.DeviceEventManagerModule
import org.json.JSONObject
import org.vosk.Model
import org.vosk.Recognizer
import org.vosk.android.RecognitionListener
import org.vosk.android.SpeechService
import org.vosk.android.StorageService
import java.io.IOException

class VoskModule(reactContext: ReactApplicationContext) : ReactContextBaseJavaModule(reactContext), RecognitionListener {
    private var model: Model? = null
    private var speechService: SpeechService? = null
    private var context: ReactApplicationContext? = reactContext
    private var recognizer: Recognizer? = null

    override fun getName(): String {
        return "Vosk"
    }

    @ReactMethod
    fun addListener(type: String?) {
        // Keep: Required for RN built in Event Emitter Calls.
    }

    @ReactMethod
    fun removeListeners(type: Int?) {
        // Keep: Required for RN built in Event Emitter Calls.
    }

    override fun onResult(hypothesis: String) {
        // Get text data from string object
        val text = getHypothesisText(hypothesis)

        // Forward the recognized text, if any
        if (text != null && text.isNotEmpty()) {
            // Don't auto-stop the recogniser - we want to do that when the user
            // presses on "stop" only.
            // cleanRecognizer();
            sendEvent("onResult", text)
        }
    }

    override fun onFinalResult(hypothesis: String) {
        val text = getHypothesisText(hypothesis)
        // getHypothesisText() returns null on malformed JSON, so avoid `!!` here
        if (!text.isNullOrEmpty()) sendEvent("onFinalResult", text)
    }

    override fun onPartialResult(hypothesis: String) {
        sendEvent("onPartialResult", hypothesis)
    }

    override fun onError(e: Exception) {
        sendEvent("onError", e.toString())
    }

    override fun onTimeout() {
        sendEvent("onTimeout")
    }

    /**
     * Converts hypothesis json text to the recognized text
     * @return the recognized text or null if something went wrong
     */
    private fun getHypothesisText(hypothesis: String): String? {
        // Hypothesis is in the form: '{"text": "recognized text"}'
        return try {
            val res = JSONObject(hypothesis)
            res.getString("text")
        } catch (tx: Throwable) {
            null
        }
    }

    /**
     * Sends event to react native with associated data
     */
    private fun sendEvent(eventName: String, data: String? = null) {
        // Write event data if there is some
        val event = Arguments.createMap().apply {
            if (data != null) putString("data", data)
        }

        // Send event
        context?.getJSModule(DeviceEventManagerModule.RCTDeviceEventEmitter::class.java)?.emit(
            eventName,
            event
        )
    }

    /**
     * Translates array of string(s) to required kaldi string format
     * @return the array of string(s) as a single string
     */